If you’re in IT, you probably remember the first time you walked into a real data center: not just a server closet, but an actual raised-floor data center, where the door whooshes open in a blast of cold air and noise and you’re confronted with rows and rows of racks, monolithic and gray, stuffed full of servers with cooling fans screaming and blinkenlights blinking like mad. The data center is where the cool stuff is: the pizza boxes, the blade servers, the NASes and the SANs. Some of its residents are more exotic, like the Big Iron in all its massive forms, from Z-series to Superdome and all points in between.

For decades, data centers have been the beating hearts of many businesses—the fortified secret rooms where huge amounts of capital sit, busily transforming electricity into revenue. And they’re sometimes a place for IT to hide, too—it’s kind of a standing joke that whenever a user you don’t want to see is stalking around the IT floor, your best bet to avoid contact is just to badge into the data center and wait for them to go away. (But, uh, I never did that ever. I promise.)

But the last few years have seen a massive shift in the relationship between companies and their data—and the places where that data lives. Sure, it’s always convenient to own your own servers and storage, but why tie up all that capital when you don’t have to? Why not just go to the cloud buffet and pay for what you want to eat and nothing more?

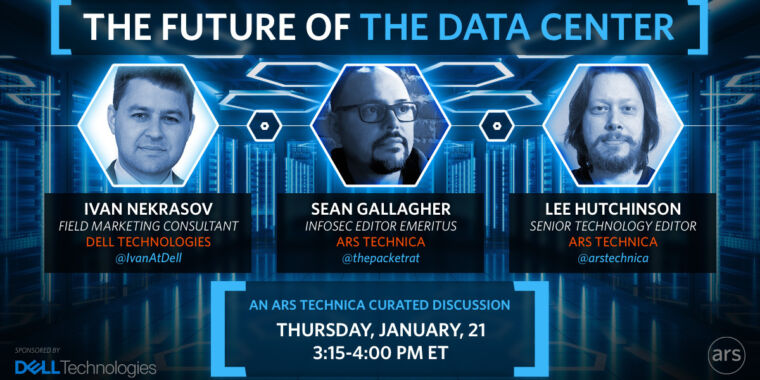

There will always be some reason for some companies to have data centers—the cloud, for all its attractiveness, can’t quite do everything. (Not yet, at least.) But the list of objections to going off-premises for your computing needs is rapidly shrinking—and we’re going to talk a bit about what comes next.

The event has concluded! Thanks to everyone who contributed questions.